Taylor Hatmaker

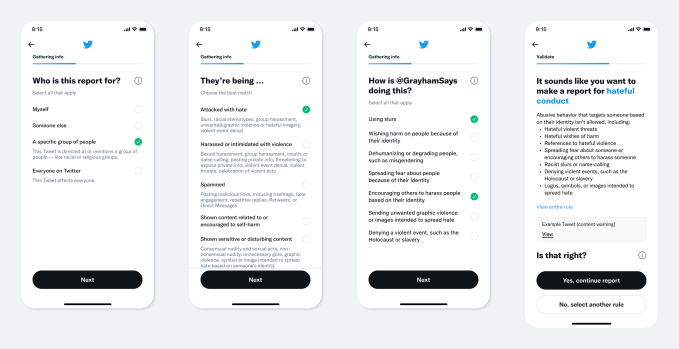

Twitter is trying out some changes to the way that users report tweets that they believe might break Twitter’s rules.

Like most social media companies, Twitter has long relied on user reporting to flag potentially policy-violating tweets. But in its new imagining of that system, those reports would give the company a richer picture of behavior on the platform, rather than serving only as a way to evaluate individual incidents in isolation.

Image Credits: Twitter

Twitter is currently testing the new system with a small group of U.S. users but plans to release it to a broader swath of the platform starting in 2022. The system will replace the existing prompts that Twitter users see when they opt to report content on the platform.

One of the biggest changes is that users will no longer be asked to specify which of Twitter’s policies they believe a tweet is violating — instead, they offer information and Twitter uses automated systems to suggest what rule a given tweet might be breaking.

The user who reported a tweet can either accept that suggestion or say that it isn’t right, feedback that Twitter can use to further hone its reporting systems. The company likens this to the difference between going to the doctor and describing your symptoms vs. self-diagnosing.

“What can be frustrating and complex about reporting is that we enforce based on terms of service violations as defined by the Twitter Rules,” Renna Al-Yassini, a senior UX manager on the team, said. “The vast majority of what people are reporting on fall within a much larger gray spectrum that don’t meet the specific criteria of Twitter violations, but they’re still reporting what they are experiencing as deeply problematic and highly upsetting.”

Twitter hopes to collect and analyze reports that fall into that gray area, using them to give the company a snapshot of problem behavior on the platform. Ideally, Twitter can identify trends in harassment, misinformation, hate speech and other problem areas as they emerge, rather than after they’ve already taken root. The company declined to comment on whether the changes would require hiring more human moderators, stating that it would use a blend of human and automated moderation to process the information.

The company also wants to improve the reporting process for users, “closing the loop” and making it more meaningful, even if a given tweet doesn’t prompt enforcement action. The new system aims to resolve some common complaints that the company identified through research on how to make the platform safer.

“It helps us address unknown unknowns,” said Fay Johnson, Twitter’s director of product management on the health team. “… We also want to make sure that if there are new issues that are emerging — ones that we may not have rules for yet — there is a method for us to learn about them.”

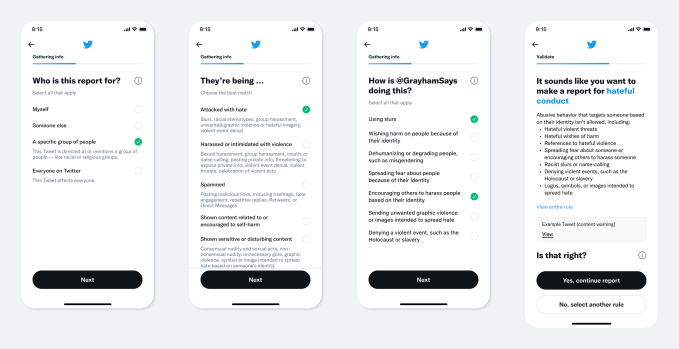
